Training with Bonsai

Training Overview

Before you begin training, review Key Concepts About Reinforcement Learning, and make sure you have completed these tasks:

- You have created a brain in a Bonsai workspace, complete with the correct Inkling code.

- You have created a simulator, and that simulator is visible in the Bonsai workspace.

If you haven't completed either of these tasks, you won't be able to train. These tasks are covered in Getting Started with Bonsai.

How to Begin Training

To begin training, follow these steps:

- Go to your Bonsai workspace.

- In the panel on the left, choose which brain you want to train.

- In the panel on the right, click the Learned Concept box. This box has an icon in its top-left corner.

- Click the green Train button in the top-left corner of the right panel.

- If your Inkling code doesn't specify a simulator, a menu appears where you can choose one.

The JobScheduling sample contains a Programmed Concept, which you must build before you can train. To build that concept, follow these steps:

- In the panel on the right, click the Programmed Concept box. This box has an icon in its top-left corner.

- Click the green Build button in the top-left corner of the right panel.

- Once the build has completed, click the Learned Concept box and train as usual.

Monitoring Training

When you begin training, the UI will transition to the Train tab for the brain being trained. If you navigate away from this interface, you can get back by following these steps:

- In the left panel, click the brain currently being trained.

- In the middle panel, click on the Train tab, next to the Teach tab.

- In the left panel, click on the box for the Learned Concept. The middle panel will show data for that concept.

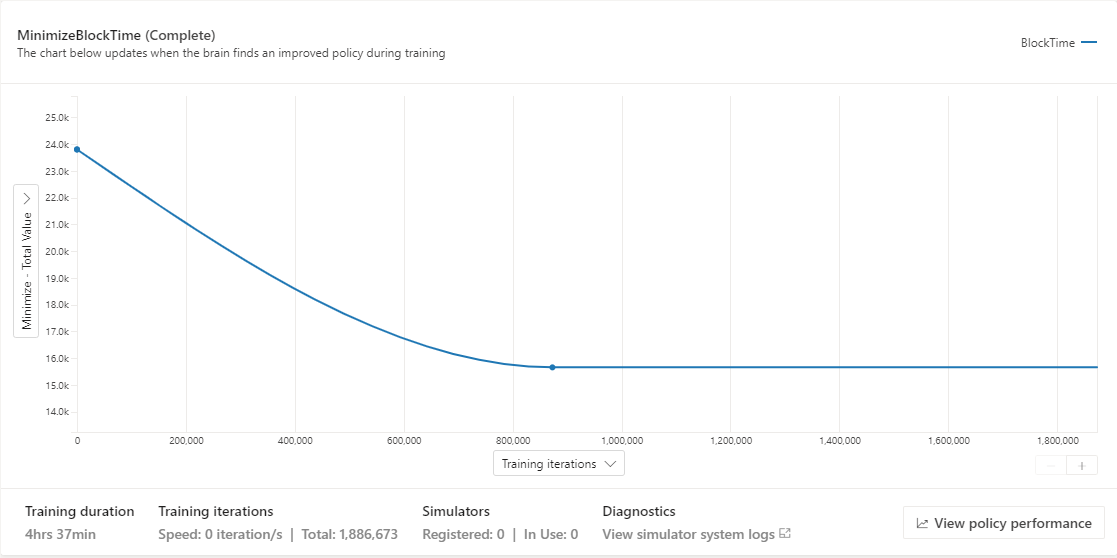

Several areas of this page help you monitor Bonsai's progress. The first is the concept performance chart:

This chart shows the performance of the Learned Concept over the course of training. It can be helpful to change the Y axis to show statistics about the concept's goals. Be aware that this chart updates infrequently: in the example above, the two data points are about 850,000 iterations apart.

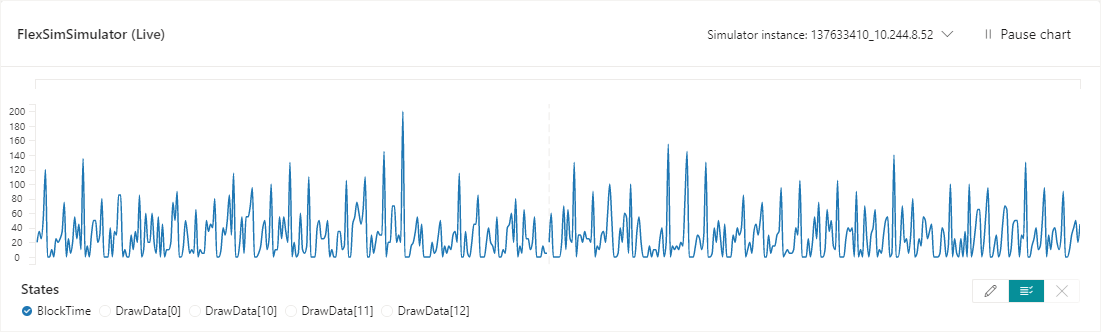

Another helpful area is the simulation status chart:

This chart can show any value from your observation space for any simulator instance used in training. Use it to verify that the values Bonsai receives from the model match your expectations.

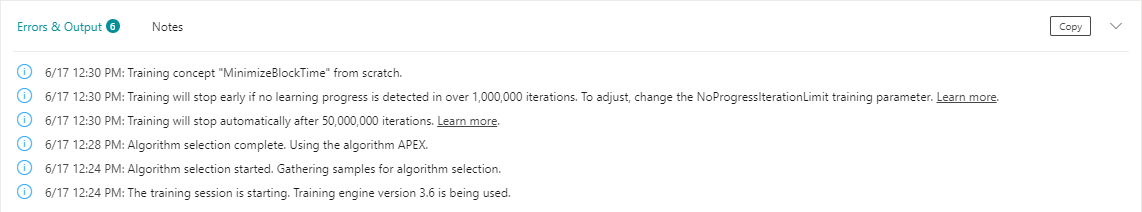

The final area discussed here is the Notes and Output tab, at the bottom of the page:

This area displays messages from Bonsai. If the Inkling code and the simulator don't match, errors appear here.

How to Stop Training

Bonsai may stop training for the following reasons:

- You press the Stop Training button in the Bonsai interface.

- Bonsai detects no improvement within the number of iterations set by its NoProgressIterationLimit.

- Bonsai reaches its TotalIterationLimit.
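To make the interaction between these stopping conditions concrete, here is a minimal Python sketch. It is not Bonsai's implementation: Bonsai evaluates these limits on its own service, and exactly how it measures "progress" is not described here. The class `StopLimits`, the function `stop_reason`, and the example limit values are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class StopLimits:
    """Hypothetical stand-ins for Bonsai's NoProgressIterationLimit and TotalIterationLimit."""
    no_progress_iteration_limit: int
    total_iteration_limit: int


def stop_reason(iteration: int,
                last_improvement_iteration: int,
                limits: StopLimits,
                user_stopped: bool = False) -> Optional[str]:
    """Return why training would stop at `iteration`, or None if it would continue.

    Assumption: "no progress" means no improvement for at least
    no_progress_iteration_limit iterations; Bonsai's real definition may differ.
    """
    if user_stopped:
        return "Stop Training pressed"
    if iteration - last_improvement_iteration >= limits.no_progress_iteration_limit:
        return "NoProgressIterationLimit reached"
    if iteration >= limits.total_iteration_limit:
        return "TotalIterationLimit reached"
    return None


# Example values chosen only for illustration.
limits = StopLimits(no_progress_iteration_limit=250_000, total_iteration_limit=1_000_000)
print(stop_reason(400_000, 200_000, limits))  # None: the last improvement was recent
print(stop_reason(450_000, 200_000, limits))  # NoProgressIterationLimit reached
```

In this sketch, a brain whose last improvement came at iteration 200,000 stops at iteration 450,000, well before the total limit of 1,000,000 is reached.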

When you are testing the connection between FlexSim and Bonsai, you may choose to stop training early, once you have verified that training is possible. You may also choose to stop training early if you notice that your observation values don't match your expectations.
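If you also want to check observation values outside the Bonsai interface, a small range check can help. The sketch below assumes you have recorded a few observations from your FlexSim model as name/value pairs. The field names and bounds in `EXPECTED_RANGES` are hypothetical placeholders for the fields your own observation space declares, and `check_observation` is not part of FlexSim or Bonsai.

```python
from typing import Dict, List, Tuple

# Hypothetical observation fields and bounds; replace them with the fields
# your own observation space declares and the ranges you expect.
EXPECTED_RANGES: Dict[str, Tuple[float, float]] = {
    "queue_length": (0, 50),
    "machine_utilization": (0.0, 1.0),
}


def check_observation(obs: Dict[str, float],
                      expected: Dict[str, Tuple[float, float]]) -> List[str]:
    """Describe every expected field that is missing or outside its range.

    NaN values also fail the range check, because comparisons with NaN are False.
    """
    problems = []
    for name, (low, high) in expected.items():
        if name not in obs:
            problems.append(f"{name}: missing from observation")
        elif not (low <= obs[name] <= high):
            problems.append(f"{name}: {obs[name]} outside [{low}, {high}]")
    return problems


# A recorded observation where utilization is impossible (above 100%).
print(check_observation({"queue_length": 3, "machine_utilization": 1.7}, EXPECTED_RANGES))
# ['machine_utilization: 1.7 outside [0.0, 1.0]']
```

An empty list means every expected field is present and in range; anything else is a reason to stop training and inspect the model before continuing.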

How to Improve Training

The goal of training is to produce an agent that performs well. However, many factors influence how long Bonsai takes to learn such an agent. Here are a few common reasons that Bonsai may not perform as expected:

- The observation space contains unhelpful data. It can be tempting to add unneeded data to the observation space. This can make training take much longer because Bonsai will need to learn which values matter and which ones it can ignore.

- The observation space doesn't contain helpful data. The observation space should contain data that Bonsai can use to choose intelligent actions. Like a detective, Bonsai can learn from relatively little information, but it will reach much better conclusions if all the key information is present.

- Bonsai can't observe the impact of its decisions. Like the previous point, this concerns which values are in the observation space. The observation space should include some data about how the simulation is performing. In addition, the simulation must run long enough for Bonsai to see the effect of its actions. For example, if poor actions early in a simulated day can cause problems later in the day, then Bonsai needs to observe the simulation for the whole day.

- The goals are defined improperly. Bonsai will learn about your simulation and create an agent that performs well, according to the goals you specify. Sometimes, it is possible for Bonsai to achieve the stated goal without improving the system. For example, if you give Bonsai a goal to minimize equipment idle time, it may unexpectedly choose to sacrifice another metric, such as overall throughput. In this case, you can change or add goals to point Bonsai in a better direction.
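The last point can be illustrated with a toy comparison. The sketch below is not how Bonsai scores goals (Bonsai converts the goals in your Inkling code into a training signal internally); it simply ranks two hypothetical policies, using made-up end-of-day metrics, under two different objectives. The names `EpisodeResult`, `best`, `idle_only`, `idle_and_throughput`, and the 0.001 weight are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class EpisodeResult:
    """Made-up end-of-day metrics for one candidate policy."""
    policy: str
    idle_fraction: float  # share of time equipment sat idle (lower is better)
    throughput: int       # items completed (higher is better)


def best(results: List[EpisodeResult], score: Callable[[EpisodeResult], float]) -> str:
    """Return the name of the policy with the highest score."""
    return max(results, key=score).policy


def idle_only(r: EpisodeResult) -> float:
    # Objective 1: minimize idle time and nothing else.
    return -r.idle_fraction


def idle_and_throughput(r: EpisodeResult) -> float:
    # Objective 2: also reward throughput (the 0.001 weight is arbitrary).
    return -r.idle_fraction + 0.001 * r.throughput


results = [
    EpisodeResult("A", idle_fraction=0.05, throughput=400),
    EpisodeResult("B", idle_fraction=0.15, throughput=900),
]
print(best(results, idle_only))            # A
print(best(results, idle_and_throughput))  # B
```

With idle time as the only objective, policy A wins even though it completes fewer than half as many items; adding a throughput term changes the winner to B.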

It can take many attempts to create a brain and simulator that can train a good agent. For this reason, Bonsai allows you to make multiple versions of each brain. You can also make notes on each brain, and you can train multiple versions simultaneously. This allows you to test multiple changes at once, such as different goals, different episode/iteration limits, or even different simulators.
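When planning several versions, it can help to enumerate the combinations before you create them, because the count grows multiplicatively with every setting you vary. The sketch below is only a planning aid: `plan_versions` and the setting labels are hypothetical placeholders, and you would still create each version and its notes in the Bonsai workspace.

```python
from itertools import product
from typing import Dict, List, Sequence

# Hypothetical experiment settings; the labels are placeholders, not Bonsai identifiers.
GOAL_SETS = ["minimize idle time", "minimize idle time + maximize throughput"]
EPISODE_ITERATION_LIMITS = [500, 2000]
SIMULATORS = ["FlexSim model v1"]


def plan_versions(goal_sets: Sequence[str],
                  limits: Sequence[int],
                  simulators: Sequence[str]) -> List[Dict[str, object]]:
    """Return one entry per brain version, covering every combination of settings."""
    return [
        {"version": i, "goals": goals, "episode_iteration_limit": limit, "simulator": sim}
        for i, (goals, limit, sim) in enumerate(product(goal_sets, limits, simulators), start=1)
    ]


for plan in plan_versions(GOAL_SETS, EPISODE_ITERATION_LIMITS, SIMULATORS):
    print(plan)
```

Here two goal sets, two episode limits, and one simulator produce four versions; adding a second simulator would double that to eight.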

Once you have trained a brain, continue to the next topic: Using a Trained Brain.